Interview Tests Considered Harmful

Coding tests in programming job interviews have come under a lot of criticism lately, most curtly in this tweet that went viral:

Google: 90% of our engineers use the software you wrote (Homebrew), but you can't invert a binary tree on a whiteboard so fuck off.

As a bit of a contrarian, I set out to write an article defending the practice of skill testing coders in job interviews. I even took the unusual step of cataloguing all of the coding interviews I failed, lest somebody accuse me of defending tests just because I happen to test well.

The working title of this article was "In defence of coding interview tests". But I concluded that the practice is indefensible. In fact, you can eliminate interview tests completely by taking an agile approach to hiring coders.

Traditional Coding Tests

The good, the bad, and the ugly.

By now most seem to agree that brain teasers and puzzles with little or no relation to programming (how many golf balls can you fit in a 747?) have proven useless. I'll dismiss these as "the ugly", and instead look at two other kinds of interview tests that are still popular, the good and the bad.

Easy Test Good

A simple and quick coding test like Fizz Buzz still holds up to scrutiny. Like it or not, it is an excellent screening tool:

It is extremely cheap to run.

All it takes is a laptop and 5-10 minutes of time, including the discussion of the solution, which easily gets into important topics like maintainability, requirements gathering, programming styles, etc.

Its accuracy is good enough.

There are certainly those who pass Fizz Buzz, but would not be a good programming hire. And perhaps there are those who fail it, but would. But while I admit that I have no proof, I just can't shake the sense that Fizz Buzz is accurate enough considering how cheap it is.
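For readers who have not seen it, here is a minimal sketch of Fizz Buzz in Python, assuming the common formulation (print the numbers 1 to 100, replacing multiples of 3 with "Fizz", multiples of 5 with "Buzz", and multiples of both with "FizzBuzz"). The function name `fizz_buzz` is my own choice, and this is just one of many acceptable solutions:

```python
def fizz_buzz(n: int) -> str:
    """Return the Fizz Buzz word for n, or n itself as a string."""
    # Check the combined case first; a multiple of 15 is a multiple of both 3 and 5.
    if n % 15 == 0:
        return "FizzBuzz"
    if n % 3 == 0:
        return "Fizz"
    if n % 5 == 0:
        return "Buzz"
    return str(n)


if __name__ == "__main__":
    for i in range(1, 101):
        print(fizz_buzz(i))
```

Even a solution this small leaves room for the discussion mentioned above: whether the combined check should be `n % 15`, how the rules would change if a third word were added, and so on.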

Hard Test Bad

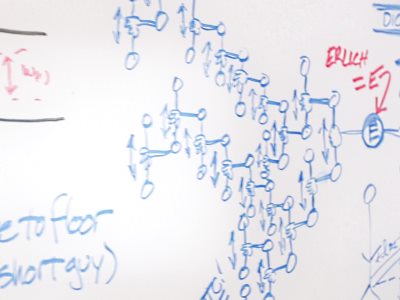

At the other extreme are the needlessly difficult tests, like the aforementioned inversion of a binary tree (min-to-max, not left-to-right) that was asked of Mr. Howell, or, to stick with binary trees, iterative traversal in post-order that was asked of me when I was a new grad.

It is no accident that both of these tests were administered on a whiteboard. Whiteboard coding is more hazing than programming. And even hazing is supposed to be an initiation ritual administered only after the candidate has already been accepted.
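To give a sense of the difficulty, here is a sketch of iterative post-order traversal in Python. It uses one common approach (a single explicit stack plus a pointer to the last visited node). I do not know which solution my interviewer had in mind, and the `Node` class and `post_order` function are names I made up for illustration:

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    value: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def post_order(root: Optional[Node]) -> List[int]:
    """Visit left subtree, right subtree, then the node, without recursion."""
    result: List[int] = []
    stack: List[Node] = []
    last_visited: Optional[Node] = None
    current = root
    while current is not None or stack:
        if current is not None:
            # Descend as far left as possible, remembering the path.
            stack.append(current)
            current = current.left
        else:
            peek = stack[-1]
            # If there is an unvisited right subtree, traverse it first.
            if peek.right is not None and last_visited is not peek.right:
                current = peek.right
            else:
                # Both subtrees are done, so the node itself can be visited.
                result.append(peek.value)
                last_visited = stack.pop()
    return result


if __name__ == "__main__":
    tree = Node(4, Node(2, Node(1), Node(3)), Node(6, Node(5), Node(7)))
    print(post_order(tree))
```

The recursive version is three lines. The iterative one needs the `last_visited` bookkeeping to tell "coming back up from the left" apart from "coming back up from the right", which is exactly the kind of detail that is easy to fumble at a whiteboard.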

The Myth of the brilliant algorithm

The rationalization I heard for these tests is: "They are looking for the person who will come up with the next big thing, and this person will be the kind of algorithmic genius unseen since Edsger Dijkstra." Even if we assume that this brilliant mathematician would not falter in such a high-pressure situation, the fact remains that very few real businesses are founded on brilliant "middle out" algorithms.

Google probably comes the closest, having succeeded by building a much better search algorithm. But even there the real epiphany - that external links to a page are more relevant than its textual content - was distinctly non-mathematical. As a coder I don't like to concede this, but brilliant code is overrated.

But good code is underrated. Plenty of businesses are hindered and even ruined by bad code, and most aren't even aware of it happening.

Good code is rarely flashy. It is maintainable, it is adaptable, it is readable, and in retrospect it looks like it was easy to make. But it wasn't. You just can't tell how well-made it is, because you don't have a badly-made alternative next to it for comparison.

Modern Coding Tests

In recent years many organizations big and small have greatly improved their programming tests, by making them look more like actual programming work:

Featuring the kind of task that will be performed on the job.

Using the tools that will be used on the job.

Having the interviewer act like a colleague, not like a judge.

Some enterprising companies have taken this a step further still. They turned the coding test into a paid day of doing actual work, pair programming with the interviewer.

I think we can go a step further yet.

But first let's step back and evaluate.

Why is this so Complicated?

Even with all the recent improvements to coding tests, horror stories persist. I have seen organizations run diligent and realistic tests, yet still end up with mountains of spaghetti code. I have also seen them turn away many great candidates, who would have certainly given them better code than what they got.

Testing coders seems like it should be easy, but as an industry we are still bad at it.

Why is that?

Predicting is Hard

Perhaps predicting a potential employee's future performance from a job interview is intrinsically very difficult. Perhaps we are not all bad interviewers and bad interviewees; perhaps making this sort of prediction from a first impression is just prohibitively difficult.

Measuring is Easy

And yet measuring coder performance is relatively easy:

Peer Review

Members of any programming team that has been together for a couple of weeks will know very well how everyone is doing. Programmers know their colleagues' strengths and weaknesses. And they may even be eager to discuss them if you ask.

And yet peer review is still very underutilized, perhaps for fear that it would negatively impact company culture.

But what is the alternative? Not knowing who on your team is coding well? Not helping the struggling coders improve? Just leaving them to underperform indefinitely? Do that for long enough, and your good coders will leave.

Code Review

With modern source control, you can discreetly review each individual programmer's contributions to the code base.

Of course "measuring" by just counting lines of code is trivial - and wrong. But an experienced coder reviewing the contributions can point out problem areas, promote better code quality, etc. This is not trivial, but it is well worth it.

And yet most organizations either have no code reviews, or they only pretend that they do, while in reality they just get their devs to quickly rubber stamp each other's work. This is a tremendous missed opportunity.

Agile works for this Type of Problem

This would not be the first time our industry has struggled by insisting on predicting and planning instead of measuring and adapting.

Agile worked for Project Management

For decades software project management was limited to the waterfall model. This methodology, which does well at building new residential subdivisions, usually fails at writing software.

It took industry outsiders - DuPont - to show us the error of our ways.

They taught us that we were failing not because we weren't trying hard enough, but because the task was impossible. They knew that unpredictable processes like discovering a new never-before-seen medication or writing a new never-before-seen piece of software need to be measured and adapted to, not predicted and planned. They lit the way for agile software development.

DuPont may not be a software company, but they could teach us a thing or two about running a business. They've been at it for 200 years.

Agile can also work for Hiring

"But! but! programs and programmers are two very different things! There is no reason to believe that what works for managing one will work for hiring the other."

Except perhaps raw data. We have come a very long way in improving our interviews, but are still having a very tough time trying to predict who will be a good hire. Yet we know that it is possible to accurately measure performance of our coders with a little bit of effort. So why not stop predicting and start measuring?

"But! but! we can't just hire a coder without testing his coding skills!"

Why not? Goldman Sachs does.

They have been hiring programmers without testing them for decades. The experience described by Just Another Scala Quant matches mine. The interview I had with Goldman Sachs in 2000 still stands out as the most pleasant of my entire career. There was no skill test, just two friendly conversations: one with the manager, and one with a fellow developer. And that was it. They extended the offer.

Unlike the Scala Quant, I was young and dumb enough to choose a different offer, but had I gone with Goldman Sachs, it's not hard to imagine what would have followed. They would have monitored and measured my performance, advising me what to improve. If things really weren't working out, they would have let me go, perhaps even during the probationary period that so many companies have, but so few utilize.

And that's it. It works just fine. Zero effort expended on testing coding skills of job candidates. Focus redirected to measuring and improving the performance of hires.

Goldman Sachs may not be a software company, but they can teach us a thing or two about running a business. They've been at it for 150 years.
